Interview with Barnabas Takacs from FFP Productions about the development of photorealistic digital twins for entertainment.

For the last few years, I have been writing about grief and digital afterlife technology and the companies that create photorealistic digital twins, chatbots, synthetic voices, AI video legacies, and holograms. The entertainment industry has been a driver of innovation, creating digital personas of Tupac Shakur and ABBA. In the last few weeks alone, Elvis, Edith Piaf, and Jimmy Stewart have been resurrected or de-aged for entertainment. Harrison Ford was de-aged by 40 years for his role as Indiana Jones in last year’s Indiana Jones and the Dial of Destiny.

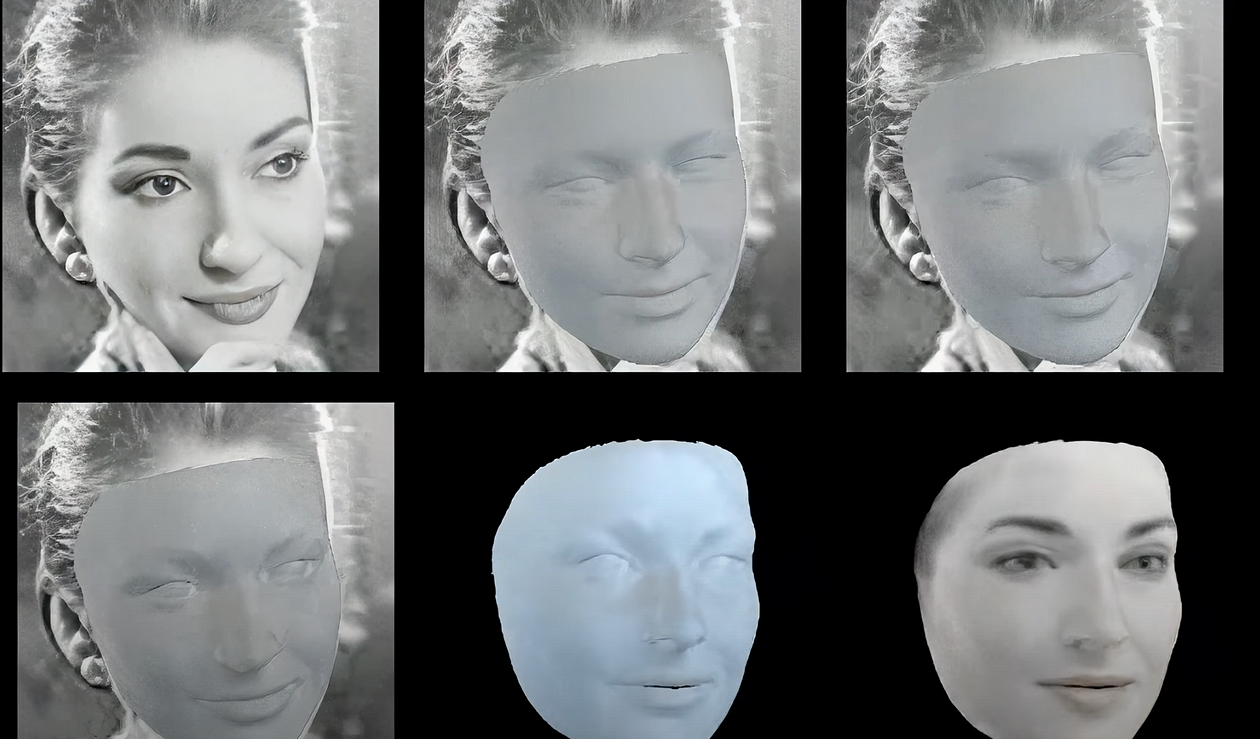

Opera singer Maria Callas, who died in 1977, was one of the biggest stars of the 20th century, and her life and work still resonate today in numerous documentaries and music releases. Her likeness has been recreated with AI technology as part of the ongoing European XReco project. Vienna-based production company FFP also recreated the interior of the Budapest Opera House, where Hollywood actor Angelina Jolie recently filmed scenes for Maria, a new film about the opera singer’s life. Callas’s synthetic likeness was reconstructed using more than 50 internet images, generative AI, AI-based shape estimation methods, traditional view-based 3D modeling, and a fine-tuned Stable Diffusion model that can pose her from any angle. The project aims to push groundbreaking technology forward while introducing Callas to a contemporary audience.

The project explores 3D production technologies and motion capture tools to build synthetic humans that accurately and realistically replicate human movement, facial expressions, and voice, so that more believable characters can be created and published. The goal is to bring past stars and performers to life for contemporary audiences, who can then engage with the past in an immersive way.

I spoke to Barnabas Takacs, PhD, Head of R&D, Special Projects at FFP Productions, about the Maria Callas project and how the technology has evolved over the last few decades. Takacs has more than 20 years of experience in research and development across multiple industries, using AI/deep learning neural nets, computer graphics, and 3D modeling/animation tools for virtual humans.

Maria Callas — watch the video

Full Interview with Barnabas Takacs Ph.D.

Ginger Liu: FFP Productions has recreated Maria Callas to celebrate her 100th birthday, which ties in with a new Angelina Jolie film about the singer’s life, called Maria. I was surprised that low-resolution images from the internet were used in the process of recreating her likeness. Callas is another deceased global star getting the AI treatment. Do you think the desire to see or hear stars like Edith Piaf and Callas helps or hinders their reputation? What copyright permissions did you need to recreate Callas?

Barnabas Takacs: Legal aspects and permissions from family members are indeed a key element in the future of these technologies, but they have never been overlooked. Twenty-five years ago, when I was first involved in a similar project, we were pushing the boundaries of technology (computer graphics at that time) to see if a photo-real version of Marlene Dietrich, the iconic German film star, could be resurrected. The family (Peter Riva) was very much involved, and the licensing arm of the film company I worked for (Global Icons) explored the legal landscape in depth. I vividly remember discussions centering on copyright, the right to one’s likeness, the right to publicity, and so on. Some of these rights are fairly universal; others vary from state to state, even within the U.S.

Enter AI: today we are having the same kind of discussions again, but with even greater urgency if we are to avoid chaos and crisis. While film technology has progressed to the point where it can deliver photo-real synthetic humans, even interactively (Unity3D’s Ziva, Unreal’s MetaHuman), it still takes a lot of artistic talent, 3D modeling, strong technical skills, and, of course, capital to develop them. This helped keep the creative processes and the use of these virtual human characters under legal and family control. AI, however, has democratized these possibilities and given access to faster, more economically viable production methods that run on the mid-range graphics cards many people have at home.

It started just a few years ago with Generative Adversarial Networks (GANs) and deepfakes, the first AI techniques to produce indistinguishable digital replicas of celebrities such as Morgan Freeman. Today, generative AI, and particularly Stable Diffusion (the method we used in our Maria Callas demo), allows us to “re-imagine” a person from any angle, with any hairstyle and wearing any dress, while placing them in practically any environment using nothing but the power of words. By translating images into words (CLIP), then transforming those words by capturing their essence and giving them new meaning (LLMs and ChatGPT), before turning them back into images (generative AI), we have created a very powerful creative pipeline that anybody can use.
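CLIP maps images and text into a shared embedding space, so one simple way to "translate an image into words" is to rank candidate captions by their cosine similarity to the image's embedding. The sketch below shows only that ranking step; the three-dimensional vectors are made-up stand-ins (real CLIP embeddings come from its trained image and text encoders and have hundreds of dimensions), and the helper names are hypothetical, not part of any CLIP library.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_captions(image_emb: list[float], caption_embs: dict[str, list[float]]) -> list[str]:
    """Return captions ordered from most to least similar to the image embedding."""
    scored = [(cosine(image_emb, emb), text) for text, emb in caption_embs.items()]
    return [text for _, text in sorted(scored, reverse=True)]


# Hypothetical toy embeddings; a real CLIP model would compute these.
image_emb = [0.9, 0.1, 0.3]
captions = {
    "portrait of an opera singer on stage": [0.8, 0.2, 0.4],
    "a red sports car": [0.1, 0.9, 0.0],
    "an empty concert hall": [0.3, 0.1, 0.9],
}

ranking = rank_captions(image_emb, captions)
print(ranking[0])
```

The top-ranked caption could then be edited by a language model and passed back to a text-to-image model, which is the round trip the interview describes.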

If we use these advanced capabilities to create genuine photographic renderings of a face, we can import them back into the 3D film pipeline by estimating the head shape and layering on the extra information needed for virtual production. But perhaps more importantly, these diffusion models, which were trained on billions of images, are now publicly available, so anyone can experiment with them. Artist communities are in lively exchange about how best to achieve their goals with these new kinds of tools.

For Maria Callas, we needed a method that could re-imagine her very own likeness. Such a model was not yet available, but the tools to retrain, or more precisely “fine-tune”, Stable Diffusion models were. That is where internet images came into the picture: we collected around 50 of them and used them to achieve the results you saw.
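The interview does not say which fine-tuning technique FFP used. One common lightweight approach for adapting Stable Diffusion to a few dozen images is LoRA (low-rank adaptation): the pretrained weight matrix W stays frozen, and only two small factors B and A are trained, giving an effective weight W + (alpha / r) · B·A, where r is the rank. The sketch below shows only how such an update is merged into a layer, with toy values and hypothetical helper names; the training loop is omitted.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_effective_weight(w, b, a, alpha: float):
    """Merge a LoRA update into a frozen weight: W + (alpha / r) * B @ A."""
    r = len(a)  # rank = number of rows in A (= columns in B)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


# Frozen 3x3 weight from a "pretrained" layer (toy identity matrix).
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Rank-1 trainable factors: B is 3x1 and A is 1x3.
B = [[1.0], [0.0], [2.0]]
A = [[0.5, 0.5, 0.0]]

merged = lora_effective_weight(W, B, A, alpha=1.0)
print(merged)
```

The savings are trivial at 3x3, but for a 768x768 layer a rank-4 update trains 2 · 768 · 4 = 6,144 numbers instead of 589,824, which is why low-rank methods suit small datasets and consumer GPUs.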

It is important to emphasize that this work was part of a European research project called XReco. It is a use-case demonstration advancing science in the media sector, not a commercial project. That is why we did not need to seek permission; it is a tribute to her greatness and a powerful demonstration of the technology, intended to raise awareness. Our project also actively studies the legal implications of NeRFs (neural radiance fields), text and data mining (TDM), and copyright under the EU Digital Single Market Directive.

Ginger Liu: Your company was one of the first to create digital clones, back in 1999, and has been creating computer graphics to reenact humans after their deaths. Could you share highlights of projects over the years and how the technology has evolved? I was interested to see how Marlene Dietrich was recreated and what you were trying to achieve.

Barnabas Takacs: The motivation behind the development of our Digital Cloning System (DCS) was to create a realistic 3D “human” digital character that appears on screen with the look and feel of a live actor, in this case, Marlene Dietrich. To achieve this, we needed to innovate on many aspects of the CG pipeline. To start with, there were no standard modeling techniques or topologies optimized for facial expressions and speech at that time. Alongside digital sculpting tools, we also created a clay model of the actress, based on the set of reference photos we got from the family, for reference and scanning, partly for legal reasons. Her grandson was actively involved in all of this; we did not want to create anything that would have been inconsistent with the late star’s wishes. She controlled her public image very carefully and had many different looks, so even selecting the reference material was not as simple as it sounds today.

Next, we had to make this model move, so we created a state-of-the-art facial tracking and animation system to follow the motion of an actor on a live set (without a cumbersome motion capture suit) and drive the movement of Marlene’s 3D digital character. That was mostly the part I worked on with a small team, given my background in face recognition and tracking methods, which had been part of my Ph.D. thesis a few years earlier.

There was no AI-based voice cloning back then, so we used a voice talent to imitate her voice and captured that performance to start the process. To translate the raw captured motion, we needed to craft special-purpose bone systems and temporal deformation controllers (TDC) to algorithmically estimate the parameters of a 3D Morphable Head Model (3DMM). We also worked on an optimized surface deformation transfer technique, studied the muscle movement underlying the skin via Finite Element Modeling of the facial tissue with the help of experts from Harvard University, and wrote novel shaders that imitated how human skin interacts with light, using layered diffuse lighting and subsurface scattering.
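In a 3D morphable model, a face shape is a mean shape plus a weighted sum of basis shapes, S = S̄ + Σ αₖBₖ, and "estimating the parameters" means finding the weights αₖ that best explain the observed geometry. The interview does not describe how the DCS temporal deformation controllers worked, so the sketch below is not their method; it shows a generic ridge-regularized least-squares fit, assuming an orthogonal basis (as PCA bases are), in which case the normal equations decouple into αₖ = ⟨Bₖ, S − S̄⟩ / (‖Bₖ‖² + λ). The two-vertex "face" and basis directions are hypothetical toy data.

```python
def fit_3dmm(observed, mean_shape, basis, reg=0.0):
    """Estimate 3DMM coefficients by ridge-regularized least squares.

    Assumes the basis vectors are mutually orthogonal (true for a PCA basis),
    so each coefficient is an independent projection of the residual.
    """
    residual = [o - m for o, m in zip(observed, mean_shape)]
    coeffs = []
    for b in basis:
        num = sum(bi * ri for bi, ri in zip(b, residual))
        den = sum(bi * bi for bi in b) + reg
        coeffs.append(num / den)
    return coeffs


def reconstruct(mean_shape, basis, coeffs):
    """Rebuild a shape from the mean plus weighted basis shapes."""
    shape = list(mean_shape)
    for c, b in zip(coeffs, basis):
        shape = [s + c * bi for s, bi in zip(shape, b)]
    return shape


# Toy "face" of two 3D vertices flattened to (x0, y0, z0, x1, y1, z1).
mean_shape = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
basis = [
    [0.0, 1.0, 0.0, 0.0, 1.0, 0.0],   # hypothetical "raise both vertices" direction
    [1.0, 0.0, 0.0, -1.0, 0.0, 0.0],  # hypothetical "narrow the face" direction
]
observed = [0.2, 0.5, 0.0, 0.8, 0.5, 0.0]

alpha = fit_3dmm(observed, mean_shape, basis)
print(alpha)
```

With no regularization this recovers coefficients of about 0.5 and 0.2, and reconstructing from them reproduces the observed vertices. A positive `reg` shrinks the coefficients toward the mean face, which helps when tracking data is noisy.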

The final step was to put it all together and composite it back into the live action. Our final output was black and white to remain consistent with Dietrich’s original look, but another Hollywood company (Pacific Title Mirage), led by Dr. Ivan Gulas and Dr. Mark Sagar, managed to develop excellent-looking photo-real people as well. We were competitors, but also good friends trying to solve the many scientific and research challenges facing us. Many of these tools are still used today by production houses, game studios, and indie artists, and they remain a hot topic in research papers that aim to build personalized photo-real avatars for new markets (Colossyan).

Related Links:

PacTitle/Mirage Dr. Ivan Gulas

Ginger Liu: The entertainment industry has always driven technological innovation. You mention neural rendering and diffusion models replacing 3D modeling. With AI, it looks like media-making is truly in the hands of everyone, and this has repercussions for those working in the industry. Do you think it will be a case of creative retraining, or do you see job losses?

Barnabas Takacs: The way AI models are trained is similar to how our brain learns to see. Our visual system develops over many years, and in a comparable way, the billions of images AI has seen on the internet allow it to literally dream up and envision new pictures of practically anything. In some sense “it” has traveled around the world and seen it all, but with much better recall than the human mind. Once a neural network is trained, it produces an output for any input. It is a very simple architecture: the information entering the first layer is quickly propagated through the network, and some output is always produced. Simply put, even in complete darkness or low-noise conditions, it will start hallucinating. In a way, this is very similar to sensory deprivation. Furthermore, some of the images our AI systems have seen were 3D renderings of CAD models, games, and multi-view datasets, which allows AI to imagine and generate 3D models as well. In psychology this is called mental rotation: the ability to imagine how an object seen from one perspective would look if it were rotated in space into a new orientation and viewed from a new perspective.
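The point that a trained network "always produces some output" can be seen in a tiny feedforward network: an all-zero input (the "complete darkness" case) or pure noise still flows through the layers and yields a confident-looking probability distribution, because biases and weights shape the output regardless of the input's content. The weights below are hypothetical fixed values standing in for a trained model; this is an illustration of forward propagation, not of any specific production system.

```python
import math
import random


def relu(x: float) -> float:
    return max(0.0, x)


def forward(x, layers):
    """Propagate an input through dense layers, then apply softmax to the last layer."""
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        # ReLU on hidden layers, raw scores (logits) on the final layer
        x = [relu(v) for v in z] if i < len(layers) - 1 else z
    m = max(x)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical "already trained" weights: 3 inputs -> 2 hidden units -> 2 outputs.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.1, 0.2]),
    ([[1.0, -1.0], [-0.5, 0.7]], [0.05, -0.05]),
]

darkness = [0.0, 0.0, 0.0]                          # no signal at all
noise = [random.gauss(0.0, 0.1) for _ in range(3)]  # low-level random noise

dark_out = forward(darkness, layers)
noise_out = forward(noise, layers)
print(dark_out)
print(noise_out)
```

Neither input contains any real information, yet both produce a valid distribution over the two outputs; the network has no built-in way to answer "nothing is there".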

When we put it all together, we can guide this process of iterative hallucination, which resolves noise into images (Stable Diffusion), with low-level hints using either verbal instructions (prompt engineering) or images (ControlNet). We can also retrain and modify it for our own creative and artistic purposes. So YES, the tools and the means of media-making are changing, and they require a bit of creative retraining and learning. It will not, however, lead to job losses; quite the contrary. It will create novel opportunities and challenges for many generations to come. In my view, the future of the Metaverse lies in AI-generated content that looks 3D but is imagined (not rendered) by diffusion models, generated on demand, and evolving in real time based on our wishes and desires. But if we each live in our own virtual dream worlds, we may end up lonelier than ever. Therefore, we need to examine and understand our responsibilities as a society, a process that will introduce many more layers and create new jobs as well.
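"Iterative hallucination that resolves noise into images" can be sketched in one dimension. The toy below uses deterministic DDIM-style sampling: it starts from pure Gaussian noise and repeatedly subtracts predicted noise until a data sample remains. In Stable Diffusion, a trained neural network predicts that noise in a latent image space and is conditioned on the text prompt (and optionally ControlNet inputs); here, as a stated assumption, the "training data" is a 1D Gaussian N(mu, sigma²), for which the ideal noise prediction has a closed form, and conditioning is omitted entirely. Helper names and the linear beta schedule values are illustrative choices.

```python
import math
import random


def make_schedule(steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Linear beta schedule; returns the cumulative products alpha_bar[t]."""
    alpha_bar, prod = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        prod *= 1.0 - beta
        alpha_bar.append(prod)
    return alpha_bar


def predict_noise(x_t: float, a_bar: float, mu: float, sigma: float) -> float:
    """Stand-in for a trained denoiser: the exact E[eps | x_t] when data ~ N(mu, sigma^2)."""
    var = a_bar * sigma**2 + 1.0 - a_bar  # variance of the noised data at this step
    return math.sqrt(1.0 - a_bar) * (x_t - math.sqrt(a_bar) * mu) / var


def ddim_sample(x_T: float, alpha_bar: list[float], mu: float, sigma: float) -> float:
    """Deterministic DDIM sampling: walk from pure noise back to a data sample."""
    x = x_T
    for t in range(len(alpha_bar) - 1, -1, -1):
        a_t = alpha_bar[t]
        a_prev = alpha_bar[t - 1] if t > 0 else 1.0
        eps = predict_noise(x, a_t, mu, sigma)
        x0_hat = (x - math.sqrt(1.0 - a_t) * eps) / math.sqrt(a_t)  # current guess of clean data
        x = math.sqrt(a_prev) * x0_hat + math.sqrt(1.0 - a_prev) * eps  # re-noise slightly less
    return x


alpha_bar = make_schedule(1000)
random.seed(0)
samples = [ddim_sample(random.gauss(0.0, 1.0), alpha_bar, mu=3.0, sigma=0.5) for _ in range(1000)]
mean = sum(samples) / len(samples)
std = math.sqrt(sum((s - mean) ** 2 for s in samples) / len(samples))
print(round(mean, 2), round(std, 2))
```

Although every run starts from structureless noise, the samples end up distributed around mean 3.0 with spread 0.5, the "training" distribution. Prompts and ControlNet work by changing the noise prediction at each step, steering where the noise resolves.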

Ginger Liu: As the technology stands, it is still possible to tell that your Maria Callas is not real. How far are we from crossing the uncanny valley, or do you think that, in our digitally mediated virtual worlds, we are already there? And is that exciting or troubling for you as creators?

Barnabas Takacs: There is still a long way to go. Our efforts within the XReco project focused on research and aimed to showcase how a new era of creative tools will be created and used by a community of artists, and how new services can emerge in an XR ecosystem. I think this is a very exciting proposition for creators and offers many new opportunities for them. At the same time, it is understandable if someone feels a bit troubled, as we do not yet fully understand or even grasp the implications of such technologies.

When I did a generative AI art project (Infinite Abstract) less than two years ago, nobody in the general public had heard of generative AI or Large Language Models yet. A few months later, Midjourney and ChatGPT became household names, and since then we have gone through multiple iterations of new tools, with hundreds of start-up companies entering this space. Diffusion models still suffer from temporal consistency problems; in other words, it is hard to create consistent-looking animations and moving video sequences. But a solution is already around the corner. Whether it is solved as part of our research agenda in XReco, by someone else in the industry, or in a secret lab, synthetic imagery will become indistinguishable from reality, so from that perspective I would consider the “uncanny valley” problem almost solved.

Related Links:

Infinite Abstract — Larger Than Life Interactive Art.

Thank you to Barnabas for an extensive, in-depth, and fascinating interview. Please check out the links throughout this interview for details on processes and innovation.

Ginger Liu is the founder of Ginger Media & Entertainment, a Ph.D. researcher in artificial intelligence and visual arts media (specifically death tech, digital afterlife, AI death and grief practices, AI photography, entertainment, security, and policy), and an author, writer, artist, photographer, and filmmaker. Listen to the podcast, The Digital Afterlife of Grief.